System Overview

The system combines mobility assistance with way-finding. It is intended to be available to travelers once they have passed airport security. From there, IAN can scan the traveler's boarding pass, show them their flight information and take them to their chosen destination in the airport.

System Features

Autonomous Navigation

Our system features fully autonomous navigation. Designed with safety and efficiency in mind, it finds the best path to the destination while also integrating obstacle avoidance and smart pathing. This ensures that all requests are fulfilled quickly and that the user is safe and comfortable for the whole duration of the journey.

Smart UI

IAN is operated through our smart UI. Built to satisfy the latest user experience guidelines, our smart UI is accessible to everyone, featuring large icons, easy-to-follow text, real-time flight information, live notifications and colorblind-friendly color schemes.

Safety & Comfort

Comfort and safety are our utmost priorities. As such, we worked very closely with experts to design a product that is not only secure and safe but also provides the user with much-needed comfort.

A new study by the Transportation Research Board’s Airport Cooperative Research Program shows that elderly passengers’ ability to navigate airports is negatively affected by various aging-related issues. Two of the most prevalent challenges aging travelers face in airports are way-finding and fatigue.

2. Growing Demand for Special Assistance

According to the 2017/18 Accessibility Report, in 2017 alone, over 3 million requests were made for assistance at UK airports. That number is expected to keep rising at around double the rate of general passenger growth.

Furthermore, the 2018/19 Accessibility Report states that a record 3.7 million passengers were assisted at 31 UK airports between 1 April 2018 and 31 March 2019. Since 2014, the number of passengers assisted has increased by 49%, while overall passenger numbers have increased by 25%.

Current arrangements in UK airports have proven to be quite poor in terms of customer satisfaction.

The 2017/18 Accessibility Report states, "Disappointingly we have classified London Gatwick, London Stansted, and Birmingham as 'needs improvement' and one airport, Manchester, as 'poor'. Information provided to us shows that disabled passengers and those with reduced mobility took significantly longer to move through the airport than other passengers, with an unacceptable number of disabled and reduced mobility passengers waiting more than twenty minutes for assistance with, in some cases, passengers left waiting for assistance for more than an hour".

This tells us that the current means of assistance cannot guarantee customer satisfaction, as they rely heavily on human involvement.

While there are several robotic assistants on the market, none of them is targeted specifically at the elderly. One example is KLM Care-e, which can assist with carrying luggage but does not address the issue of exhaustion while navigating a large airport. Another is AIRSTAR, the robotic assistant present at Incheon, South Korea. While it is able to give directions to travelers and even guide them to their destination, it does not address the issue of exhaustion either.

System Use Cases

Use Case 1

John is 65 years old and has traveled for hours to reach the airport for his flight. He is feeling exhausted and the airport is large. His gate and the restaurants are a long way from airport security and he would appreciate some help getting there. However, he did not request special assistance before his arrival, so he does not have priority and will have to wait until a member of staff becomes available.

John uses IAN to take him to any point of interest within the airport without the need for human involvement.

Use Case 2

Jane is 70 years old and has just arrived at the airport for her flight. Most airports make her nervous because she always has a hard time navigating them; in particular, she struggles to find her way to the restaurants and toilets. Jane uses IAN, chooses her desired point of interest and is comfortably taken there. She is relieved that she doesn't need to ask around or keep looking at the signs to find her way. IAN has also informed her of a change in her flight status, saving her the stress of constantly looking out for new updates from airport staff.

System Functionality

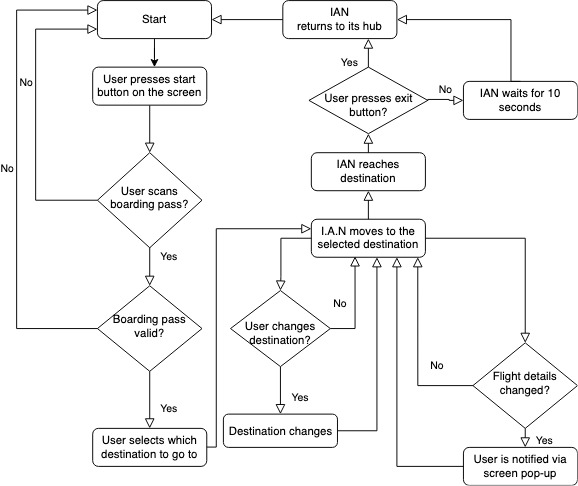

The intended functionality of our system is demonstrated by the flow chart below.

System Components

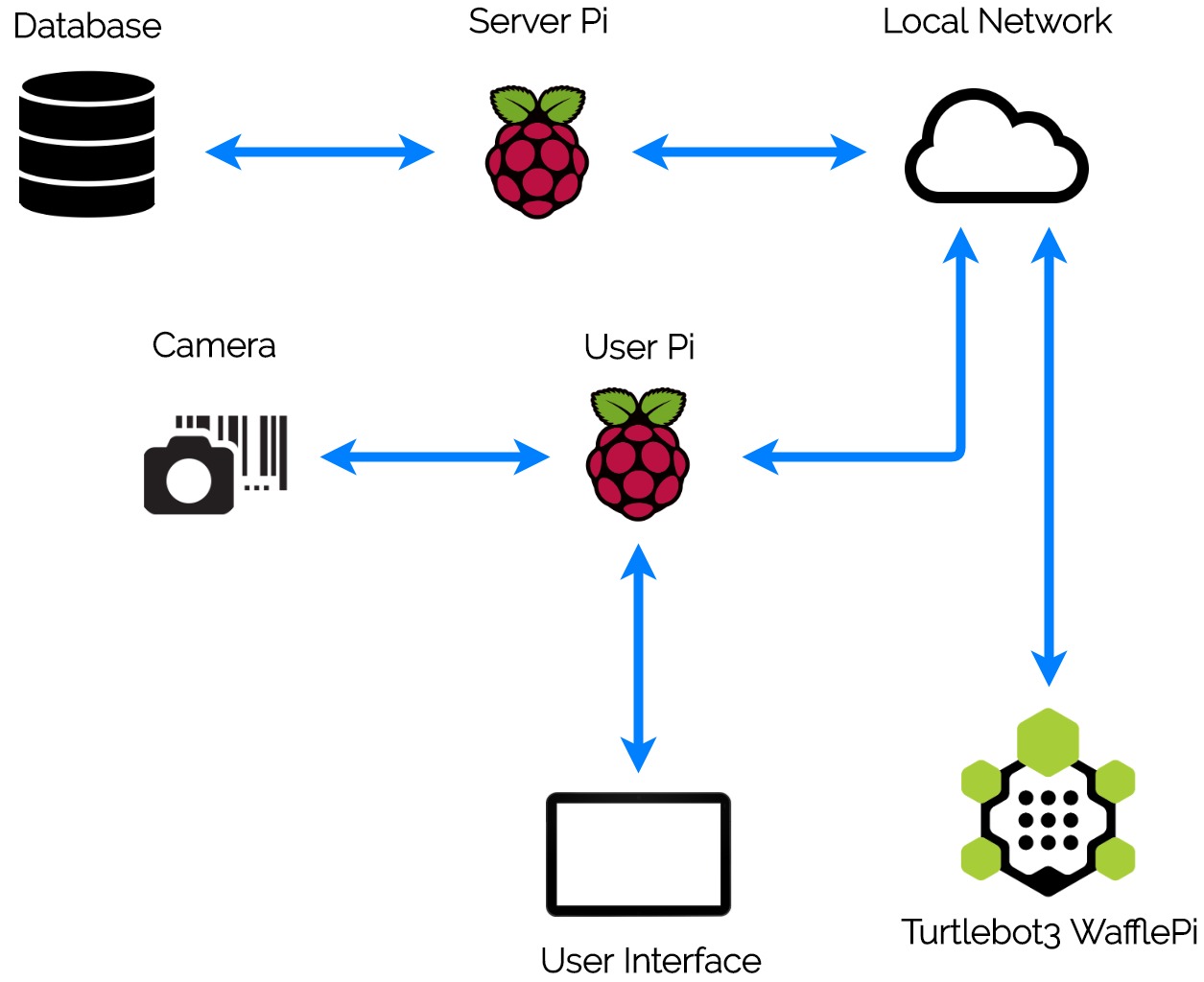

Our system is currently composed of the following components:

• The Turtlebot and its Raspberry Pi - contains the core navigation logic.

• The User Pi - a Raspberry Pi needed by the Turtlebot Pi to act as the ‘master’ for the Turtlebot’s navigation.

• The Server Pi - a Raspberry Pi that contains the database and acts as an airport server.

• The UI - lives on the User Pi and is displayed on a 7" touchscreen.

• The Camera - acts as a barcode scanner; scans boarding passes, and is connected to the User Pi.

• The User Seat - built on top of the Turtlebot.

System Demonstration

On the left, you can find a video demonstrating how our system works.

On the right, you can interact with the UI that is demonstrated in the video.

User Guide

You can have a look at our user guide for building and trialling the prototype of the system.

For the 4th sprint, pre-COVID-19, we had started preparing a Python script that receives a video stream from a camera located in the gap between the back support of the user seat and the seat foundation. The purpose of this setup is to detect whether the user is sitting down, based on the light intensity the camera receives. This was intended as a safety feature to ensure the robot never moves when it is unsafe to do so.
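As a rough illustration of the seat-detection idea, the sketch below decides whether the seat is occupied from the average brightness of a single grayscale frame. The function names, the frame representation (rows of 0-255 pixel values) and the threshold of 60 are assumptions made for this example, not values taken from our script. It also assumes that a seated user blocks light from reaching the camera.

```python
from statistics import mean

# Hypothetical threshold: frames darker than this are treated as "seat occupied",
# on the assumption that a seated user blocks light from reaching the camera.
DARK_THRESHOLD = 60


def mean_intensity(frame):
    """Return the average pixel value of a grayscale frame (rows of 0-255 ints)."""
    return mean(pixel for row in frame for pixel in row)


def is_seat_occupied(frame, threshold=DARK_THRESHOLD):
    """Treat a dark frame as an occupied seat and a bright frame as an empty one."""
    return mean_intensity(frame) < threshold


bright_frame = [[200, 210], [190, 205]]  # light reaches the camera: nobody seated
dark_frame = [[10, 20], [15, 5]]         # light is blocked: somebody is seated
print(is_seat_occupied(bright_frame), is_seat_occupied(dark_frame))
```

In practice the threshold would need calibrating against the lighting of the actual environment, and the robot would only be allowed to move while the seat is reported as occupied.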

Navigation Enhancements

During our scalability research we came across a more efficient navigation algorithm which, given sufficient time and resources, would allow our robot to navigate around obstacles in a safer, more realistic way. Our research suggests that Generalized Voronoi Diagrams (GVD) could be applied to Turtlebot navigation in ROS. Without going into technical details, this research concluded that the current implementation, which uses Dijkstra's method, might force the robot to abort if an obstacle appeared while the robot was turning, whereas the GVD algorithm would plan a safe route around it. This is particularly relevant to our system since airports have many corridors, and IAN would therefore have to turn frequently.
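For readers unfamiliar with Dijkstra's method, the following minimal sketch finds a shortest path on a small occupancy grid. It is a generic illustration, not the planner that ROS runs on the Turtlebot. The grid, the 4-connected moves and the function name `shortest_path` are assumptions made for the example.

```python
import heapq


def shortest_path(grid, start, goal):
    """Dijkstra's method on a 4-connected grid; 1 marks an obstacle, 0 free space."""
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    prev = {}
    queue = [(0, start)]
    while queue:
        d, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if d > dist[cell]:
            continue  # stale queue entry, a shorter route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nd = d + 1  # every move costs one grid step
                if nd < dist.get((nr, nc), float("inf")):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(queue, (nd, (nr, nc)))
    if goal not in dist:
        return None  # no obstacle-free route exists
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# A short corridor with a wall that forces a detour around its right-hand end.
corridor = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(shortest_path(corridor, (0, 0), (2, 0)))
```

A shortest path like this one tends to hug obstacles and corners. A planner based on a Generalized Voronoi Diagram instead favours routes that keep as much clearance from obstacles as possible, which is the property that makes it attractive for corridors.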

Navigating Multiple Floors

A potential further development of IAN is to navigate buildings with multiple floors. Many airports host restaurants and shops on different floors from the departure gates. One way to support this is to integrate IAN with airport lifts, although this would require further research.

Multiple Languages

IAN is currently available only in English. One of the next steps we intend to take is to make our product accessible to everyone around the globe. For this, we plan to integrate many more languages, so that any traveler, no matter which language they speak, has a similar level of access to IAN.

Voice commands & Text-to-speech

While designed with the elderly in mind, our product range could be expanded to provide assistive services to other groups. One of our main future goals is to make airports more comfortable for those who are visually impaired. With some modifications, we plan to adapt the current product to include voice accessibility and screen reading, so that those with sight difficulties have a similar level of access to IAN.

Market Transferability

As of now, our primary customers are airports. However, IAN can easily be adapted to serve different market segments. From insurance companies that provide services to injured workers to firms that want to make their offices accessible to their disabled employees, we believe IAN has the potential to fulfill requests for special assistance in any building environment.

Airports provide many accessibility features but sometimes they can be hard to access and may need booking in advance. IAN provides assistance as soon as you pass security without needing a reservation.

Attracting More Customers

Airports can be daunting to those with fatigue challenges and way-finding difficulties. Making airports more accessible and easier to navigate can only encourage those customers to travel by air more frequently.

Reduced Costs

Under European law, airports are required to provide special assistance to passengers free of charge. Each airport calculates a passenger with reduced mobility (PRM) charge, which is included in the airport tax. Our product can help passengers move to specified locations without the need for staff involvement and help airports reduce special-assistance-related costs.

After analyzing the average salaries of on-the-ground airline staff (of which those providing the wheelchair service are a subset) and estimating the likely costs of manufacturing and maintaining our product, we speculate that having IAN as part of an airport's ecosystem could reduce net costs by lowering the number of staff needed to provide such assistance. Further details are in the Business Plan.

User Interface

Users interact with IAN using a simple, modern-looking UI on a Pi touchscreen. We created the UI using Qt, a free, open-source, cross-platform toolkit, and its Python bindings, PyQt.

We designed the UI using the QtDesigner tool and integrated the backend code with the other functionality of IAN (scanning, database retrieval, navigation) with several Python scripts.

We focused on usability, using a large sans serif font for readability and favouring symbols over words. We chose colours suitable for colourblind users, and included a help page and a call-for-help button. These steps ensure IAN is as intuitive as possible for the elderly.

You can interact with our UI on the right.

Autonomous navigation is the most significant functionality of our product.

For this reason, it was under regular re-evaluation and improvement.

Basic Navigation Functionalities

1. Test Purpose

The navigation functionality needed to reach an acceptable baseline before it could be formally evaluated against our product's set standards.

2. Test Procedure

Initially, even though the Turtlebot's navigation was functional, we noticed that the planning and movement of the robot were inaccurate. The robot failed to reach its given destination, kept hitting walls and obstacles even though they were clearly shown on the sensor map, went past the goals without stopping or was unable to assume the instructed orientation.

To overcome these issues, the Navigation team had to constantly experiment with the Navigation Parameters (see the "How it Works" section). With each tuning, we informally evaluated the performance of the navigation and repeated the process.

3. Test Results

After a lot of experimentation and informal evaluation of the navigation behavior, the team established a combination of parameters that would provide the optimal navigation behavior.

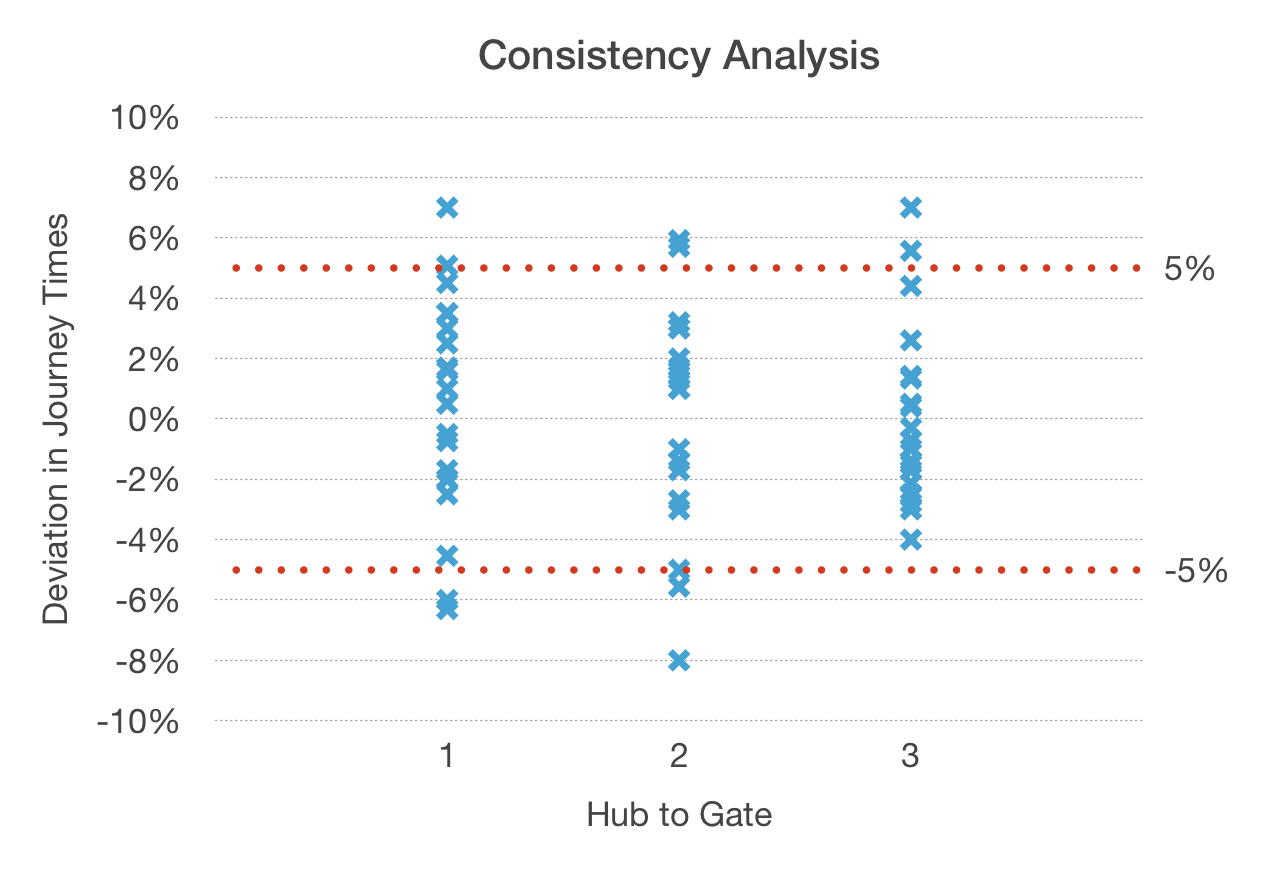

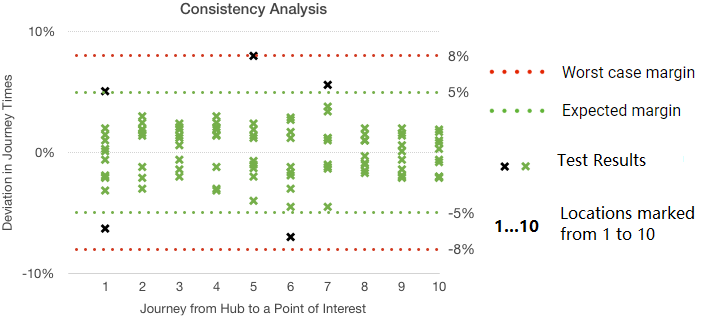

Consistency in Travel Time

1. Test Purpose

The next step was to evaluate the consistency of the travel time of the Turtlebot. We needed to ensure that the Turtlebot is consistent in both its travel time and its chosen path for any given destination, assuming the same initial position and environment. Bear in mind that between the second and third sprint, the user seat and an extra level were installed on the Turtlebot, potentially jeopardizing its consistency. This evaluation would provide insight into how much the additional modifications affected the Turtlebot's consistency.

2. Test Procedure

During the second sprint, only 3 destinations were available for the Turtlebot, whereas during the third sprint this grew to 10. For each hub-to-point-of-interest journey (where the hub is the starting position of the Turtlebot), we carried out 20 tests to measure the time taken for the Turtlebot to travel from its hub to the specified destination after receiving the command to move. We then calculated the average travel time for each journey and plotted the deviation of each test from its respective average. The graph on the left shows the tests for the second sprint, and the graph on the right shows the tests for the third sprint.
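The deviation calculation described in this procedure can be sketched in a few lines of Python. The function name `percent_deviations` and the sample travel times are hypothetical and stand in for the measurements from one journey.

```python
from statistics import mean


def percent_deviations(times):
    """Return each run's deviation from the mean travel time, as a percentage."""
    average = mean(times)
    return [100 * (t - average) / average for t in times]


# Hypothetical travel times in seconds for one hub-to-destination journey.
example_times = [20.0, 21.0, 19.0, 20.5, 19.5]
for run, deviation in enumerate(percent_deviations(example_times), start=1):
    print(f"run {run}: {deviation:+.1f}%")
```

Expressing each deviation as a percentage of the journey's own average makes short and long journeys comparable on the same plot.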

3. Test Results

From the analysis, we can observe that most travel times deviated from their respective journey averages by less than 5%. Furthermore, the consistency of the Turtlebot's travel time remained stable after the modifications. Our product's time consistency is of major importance: for customers to trust our product in a real-world scenario, we need to ensure that it is reliable and capable of carrying passengers to their destination on time.

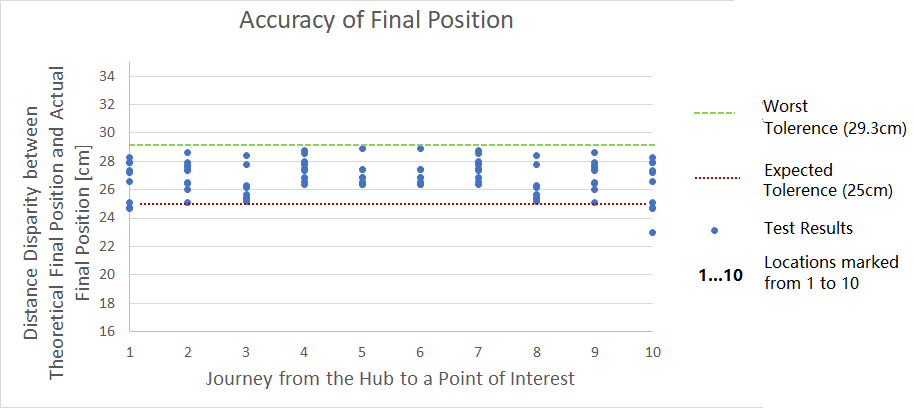

Accuracy of the Final Position for Each Journey

1. Test Purpose

The last step was to evaluate, for each journey, how closely the Turtlebot's actual final position matched its target position (the coordinates stored in the database). Bear in mind that during our navigation parameter tuning, we manually allowed the Turtlebot a tolerance of 25 cm to reach the destination (using the xy_goal_tolerance parameter), meaning that within a 25 cm radius of the given coordinates the Turtlebot would stop and assume it has reached its destination.

2. Test Procedure

For each hub-to-point-of-interest journey, 20 tests were carried out. When the Turtlebot reached the destination, we recorded its position and calculated the difference between the given coordinates and the actual coordinates. Note that the distance the Turtlebot traveled, on any journey, was between 1.5 m and 5.5 m.
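The difference between the given and actual coordinates can be computed as a straight-line distance, as in the sketch below. We assume here that the error is measured as Euclidean distance in the map plane. The coordinates and the function name `position_error` are hypothetical, and the constant simply mirrors the 25 cm value set through xy_goal_tolerance.

```python
import math

XY_GOAL_TOLERANCE_M = 0.25  # mirrors the 25 cm goal tolerance, in metres


def position_error(goal, actual):
    """Euclidean distance in metres between the target and the recorded position."""
    return math.hypot(actual[0] - goal[0], actual[1] - goal[1])


# Hypothetical coordinates in metres; real values come from the database and the tests.
goal = (3.00, 1.50)
actual = (3.03, 1.46)
error = position_error(goal, actual)
print(f"error = {error * 100:.1f} cm, within tolerance: {error <= XY_GOAL_TOLERANCE_M}")
```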

3. Test Results

Our measurements show that the maximum disparity was 5 cm, which we deem acceptable. In a real-world scenario, our product will be scaled up, meaning that these measurements will change. However, we estimate that the accuracy of the Turtlebot's localization will not, as the same principles will be used even if the distance traveled is larger. We allow a minor tolerance for the Turtlebot, keeping in mind that an airport point of interest is not a specific spot but rather a general area for the user to arrive at.

UI User Testing

1. Testing Procedure

We tested our user interface on 5 participants over the age of 50.

We gave context to the UI by explaining what IAN is and what it does physically.

We then asked the participants to think aloud (using the think-aloud protocol) while using the UI and then asked them the following directed questions:

• Did you find anything confusing or misleading?

• At any point were you stuck?

• What did you think of the design?

• Do you have any other comments or suggestions?

2. Findings

All of the participants found the help pages easy to follow, and at no point were any of them stuck. Some of the participants noted their appreciation of how simple the design was. 4 out of the 5 users found the concept of the pause button confusing, and 2 users were also confused by the map on the UI.

Some users suggested improvements to the text content of several of the UI buttons or pages, most of which we have already incorporated into the UI:

• 'Take Me Somewhere' → 'Go Somewhere'

• 'Goal' → 'Destination'

• 'Your Info' → 'Your Flight'

• Add personal messages such as 'Enjoy your flight, {name}!'.

• Add a comment on the 'Where' page which informs the user that they will be able to change their mind on the destination.

• The airport help desk should be a destination option.

3. Improvements

We learned from our testing that we had taken an intuitive understanding of common design elements for granted. Many features of our UI weren't confusing for us (the team) or our peers (others on the course), but they were for the older people we tested with, who are less fluent with technology. So we decided to make the following changes to help even the least technologically experienced users understand IAN and use the system with confidence:

• A 'Welcome to IAN' screen and two short introduction screens to familiarise new users with IAN.

• A friendlier message asking users to scan their boarding pass, and introducing the functionality of the help and exit buttons.

• Calling the user by their name once their flight details have been retrieved, and wishing them a safe flight once their journey is over, to make the system less intimidating and more personal.

• Changing the 'Take Me Somewhere' button to the clearer 'Go Somewhere'.

• Renaming 'Your Info' to 'Your Flight'.

• Warning the user IAN will begin moving once they confirm their destination.

• Changing the 'Pause' button to one that uses the word pause instead of the pause symbol for more obvious functionality.

• Always showing the 'New Goal' button to the user.

• Providing confirmation to the user once they have reached their destination.

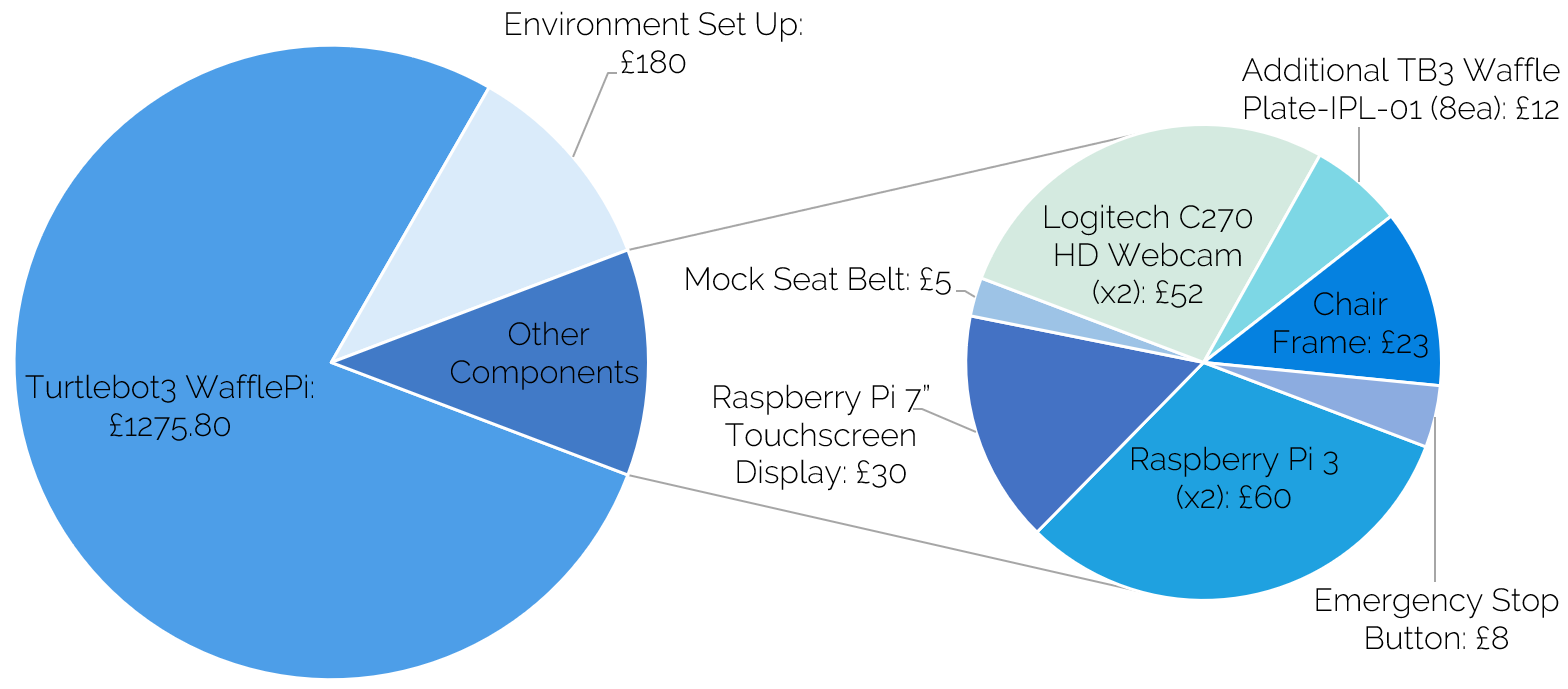

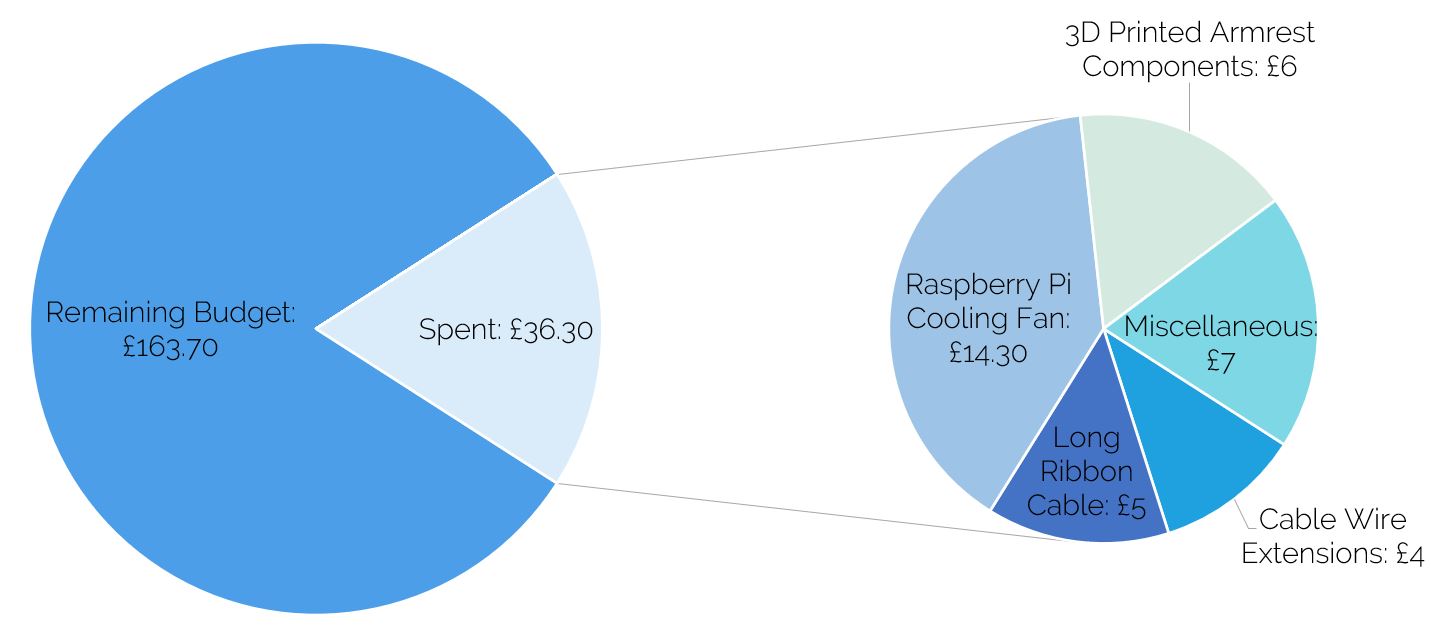

Current System Costs

The total cost of items borrowed in the making of the system is £1,645.80.

The breakdown of the borrowed items' costs is shown in the following pie chart.

Our budget for externally purchased items is £200. The total cost of our externally purchased items is £36.30, and is broken down in the following pie chart.

For our final demo, we planned on including a footrest for the passengers, as well as focussing on aesthetics such as covering for the armrests, cushioning for the chair and wire concealment. We also planned to include text-to-speech features and warning lights to alert other passengers who may come in the way of the system. We estimate that this would cost an additional £20 - £25.

Technician Budget

Given a budget of 10 hours, we estimate we spent 4.5 hours collaborating and working with the technicians. Broken down, this includes:

• Printing 3D components: 1 hour.

• Cutting wood and drilling holes for chair frame: 1 hour.

• Meetings, discussions, and further assistance: 2.5 hours.

Business Plan

1. Current Human Service Cost

We start by making a few assumptions about the market:

• According to the UK Civil Aviation Authority, there was a record number of 3.7 million requests for assistance at UK airports in the latest year.

• We presume it takes one airport staff member one working hour to fulfill such an assistance request.

• According to Glassdoor, British Airways' customer service staff on the ground make between £10 and £13 per hour. We will presume £15 per hour for the sake of a safer estimate.

We presume that our product can fulfill only half of those requests, which is 1,850,000 requests a year. Given those assumptions, we estimate the current cost of responding to those 1,850,000 requests to be 1,850,000 x £15 = £27,750,000.

2. Product Units Estimate

We now have to make assumptions about the units of the product we would produce per annum.

Considering there are 3.7 million mobility assistance requests per year (365 days), that amounts to around 10,137 such requests a day. We assumed that our product can cater to only half of those, so roughly 5,000 requests a day. If we also presume a completely uniform distribution of those requests over the 24 hours of the day, we arrive at 208 requests per hour. To recap, that is 208 mobility requests that our product can fulfill per hour around the UK.

Assuming, as we did for human assistance, that each request occupies a unit for one hour, it makes sense to produce 208 units in the first year and release 208 units of the enhanced version of the product each following year.
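The units estimate can be reproduced with the short calculation below. The variable names are ours, and the final step assumes that one unit handles one request per hour, in line with the one-hour-per-request assumption made for human assistance.

```python
REQUESTS_PER_YEAR = 3_700_000  # assistance requests at UK airports per year

requests_per_day = REQUESTS_PER_YEAR / 365  # about 10,137 requests a day
served_per_day = 5_000                      # roughly half of the daily requests, as rounded in the text
served_per_hour = served_per_day / 24       # about 208.3, assuming a uniform spread over 24 hours
units_needed = int(served_per_hour)         # 208, assuming one request per unit per hour

print(round(requests_per_day), units_needed)
```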

3. Deployment Costs: Fixed Costs

Staff Costs:

• FTE 1.0 – CEO (strategy/governance) – Salary Costs = £35,000 pa.

• FTE 1.0 – CTO (technical) – Salary Costs = £35,000 pa.

• FTE 1.0 – Engineer (robotics) – Salary Costs = £30,000 pa.

• FTE 1.0 – Software Developer – Salary Costs = £30,000 pa.

• FTE 1.0 – Sales Manager – Salary Costs = £25,000 pa.

• Employer's contributions (for staff, e.g. pensions, bonuses, employer's NI; approximately 20% of staff costs): £26,000 pa.

Lease / Rent / Office Space Costs: £20,000 pa.

Equipment Lease (e.g. long-term equipment for building robots etc.): £15,000 pa.

Insurance (liability, indemnity, employers, buildings cover, contents & stock, legal expenses, theft, criminal damages, etc.): £2,000 pa.

IT (software, computing equipment, data storage, etc.): £15,000 pa.

Therefore, we project our fixed costs to have a rough total of £233,000 per annum.

4. Deployment Costs: Variable Costs

Inbound Logistics - materials required for robots:

• Motorized wheelchair: £600-£2000.

• Motherboard with CPU unit, fan and the display screen: £600.

• Barcode Scanners: £15-29.

• Light/weight sensors: £2-7.

• LIDAR sensor: £146.

• Total for each unit: £1,360-£2,780.

• We take the upper bound of the costs above as the cost of each of the 208 units.

• Therefore, the inbound logistics total is £2,780 x 208 = £578,240 per annum.

Operational Costs - production costs:

• N/A as it's covered in the fixed costs.

Outbound Logistics - delivery/handling costs:

• Looking at other companies' delivery costs for equipment of similar weight, we project the delivery and handling costs to be around £40 per unit, which is a fairly crude estimate.

• Accounting for the delivery of 208 units, the outbound logistics total comes to around £40 x 208 = £8,320 per annum.

Customer Services - labour costs:

• We are assuming that our product will have a failure rate of 2%, meaning that out of our 208 units, 4-5 will be faulty and need replacement or reimbursement. We will take that number to be 5.

• Therefore, the total customer service cost is 5 x (£2,780 + £40) = £14,100 per annum.

Therefore, the total variable costs per annum are projected to be £600,660.
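The variable-cost arithmetic above can be checked with a few lines of Python. The constant names are ours; the figures are the ones quoted in this section.

```python
UNITS = 208
UNIT_MATERIALS = 2_780  # upper bound of the per-unit materials estimate (GBP)
UNIT_DELIVERY = 40      # delivery and handling per unit (GBP)
FAULTY_UNITS = 5        # about 2% of 208 units, rounded up

inbound = UNIT_MATERIALS * UNITS                                    # inbound logistics
outbound = UNIT_DELIVERY * UNITS                                    # outbound logistics
customer_service = FAULTY_UNITS * (UNIT_MATERIALS + UNIT_DELIVERY)  # replacements
variable_total = inbound + outbound + customer_service

print(inbound, outbound, customer_service, variable_total)
```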

5. Revenue Streams

To recap, our total costs are around £833,660 per annum (£233,000 fixed plus £600,660 variable). This is only around 3% of the £27,750,000 cost of human assistance.

We first assume that, at least to begin with, the only purchasing option is to buy each unit individually. Given the extensive use we predict for the product and the high safety standard it has to meet, we assume a service life of 1 year.

Since our total costs per annum are estimated to be around £833,660 for the production of 208 units (i.e. around £4,008 per unit), we will set the price of the first purchase at £5,500 per unit. If the customer wishes to purchase the product again the following year, we ask them to return the used product and purchase the new one for £5,000 per unit. We assume the £500 difference is recovered by recycling or reusing parts of the returned product.

Assuming the sale of 208 units a year at the first-purchase price, revenue should be around £1,144,000 per annum. This makes for a profit of roughly £310,340 per annum.
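The revenue and profit figures follow directly from the cost totals, as the sketch below shows. The constant names are ours, and the calculation assumes every unit is sold at the first-purchase price of £5,500.

```python
FIXED_COSTS = 233_000         # GBP per annum
VARIABLE_COSTS = 600_660      # GBP per annum
UNITS = 208
FIRST_PURCHASE_PRICE = 5_500  # GBP per unit

total_costs = FIXED_COSTS + VARIABLE_COSTS
cost_per_unit = total_costs / UNITS
revenue = FIRST_PURCHASE_PRICE * UNITS
profit = revenue - total_costs

print(total_costs, round(cost_per_unit), revenue, profit)
```

In later years, units bought back at the £5,000 repeat price would lower revenue per unit, so this figure is best read as the first-year case.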

6. IAN's Cost vs Human Cost

Assuming 208 units are sold per year, the overall cost to customers would be around £1,144,000 per annum, which is only about 4% of the human cost per annum. That means a reduction in special-assistance-related costs of around £26.6 million.

Team Members and Contributions

Navigation

Co-managed the team: responsible for task allocation and planning. Led the navigation team, and was responsible for the navigation functionalities and ROS programming. Co-developed the promotional website.

DB/Scanning

Co-managed the team. Contributed to the writing of technical reports and the user guide, website development, the early steps of navigation scripting, and the building and integration of the database and scanning functionalities.

UI

Backend developer for the UI. Wrote the UI scripts, helped design the UI and integrate it with the other subsystems, carried out user testing, and demonstrated the project.

Appearance

Member of the appearance team. Tasked with designing how the system would look and implementing such designs. Also contributed to the writing of reports, the user guide and slides for demonstrations.

Appearance

Member of the appearance team. Worked to design and develop how the system would look. Also worked on setting up the development environment and provided technical assistance when needed.

Navigation

Member of the navigation team. Helped with creating scripts for navigating and pausing functionalities. Also worked on integrating the navigation with the UI.

DB/Scanning

Member of the DB/Scanning team. Worked on developing the scanning scripts, the live updates functionality and making the Database. Also integrated the scanning and live update functionalities with the UI.

Navigation

Contributed to running the evaluation tests and analysis as well as presentations. Also contributed to user testing by managing the ethics application.

UI

Member of the UI team. Designed the UI and helped integrate some of the UI's features with other parts of the system such as live updates and navigation functionalities.

Appearance

Contributed to the database setup, evaluations, research and writing of the technical reports and the user guide.

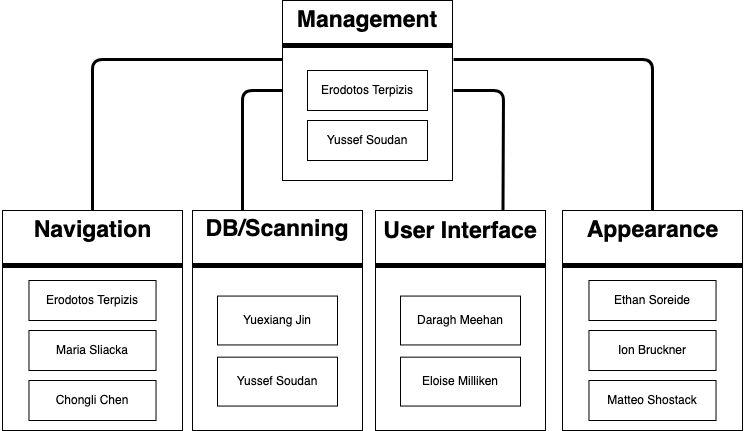

Team Organisation

Agile Development

Due to the nature of this course and its assessment structure, which included product demonstrations every 3 weeks, we decided to go with an agile development methodology. This method is based on iterative development, where requirements and product enhancements are influenced by regular feedback from the expert team.

Trello

For our agile development management, we decided to use Trello.

Using Trello, individuals were assigned tasks with specific deadlines.

We used "To Do", "In Progress" and "Done" boards to keep track of the status of each task.

Communication

We decided that the main medium of communication should be Slack, as it provided us with the option of creating channels for sub-groups within the team for better communication as well as having different channels for different content (e.g. meeting-related).

Weekly meetings

Team meetings and meetings with our mentor took place on a weekly basis. The meetings were co-led by our managers and their purpose was to keep the team updated on the progress of the product. In the meeting, each team would present their completed tasks. We would then break down the milestones of the sprint into tasks and arrange ourselves into teams depending on the ongoing tasks. Most teams remained the same across sprints and some teams were rearranged when needed. During the meetings, our Trello board would be updated to keep track of in-meeting decisions.

Code Repository

We decided to use GitHub for our code-sharing and version control so that each team member could have access to the product's code. For example, the User Interface team had their own branch to test their potential features before merging with the main branch.

SCRUM

To keep every member of the team up to date with other members' work, the team had daily scrums over Slack. The 'scrum window' lasted for 2 hours. In this window, each member wrote what they had done since the last scrum, what they were currently working on, and what they'd work on next. Daily scrums allowed all members of the team to be aware of problems immediately, instead of delaying problems to the weekly meetings.

Nuclino

At the end of each sprint, each team wrote a Nuclino page with instructions on how they accomplished their tasks and problems they faced, alongside their solutions and likely causes. If a member of the team became unavailable at any point in the future, other members of the group could pick up where they left off by following the steps they documented.

Team Structure

Sprints & Accomplished Milestones

| Sprint 1 Milestones | Team |
|---|---|
| The physical environment for the robot is set up, and the Turtlebot can be moved manually. • Made a map of the environment using SLAM. • Could move the Turtlebot manually using RViz by pointing to a destination. | Navigation, Appearance |
| The robot can scan a boarding pass in the form of a QR code or barcode. • Built and populated an early version of the DB locally. • Obtained a camera that is able to auto-focus on the codes. | DB/Scanning |

| Sprint 2 Milestones | Team |
|---|---|
| The user can interact with the robot using an interface (UI) on a display screen. • A screen is installed and the user interface is created. • The UI is integrated with the navigation functionalities. | UI |
| The robot can move from any point in the airport to a predefined point, such as a gate, automatically. • Coordinates of destinations in the map are stored on the DB. • Could move the Turtlebot automatically to a destination by running a Python script. • By clicking a button on the UI, the Turtlebot can move to a predefined destination on the map. | Navigation, UI, DB/Scanning |
| The robot avoids static obstacles while moving in a smooth manner. • Changed the navigation parameters to make obstacle avoidance smoother. | Navigation |
| The foundations of the user seat are built (i.e. the frame). • The foundation of the seat is built using wood and metal frames. | Appearance |

| Sprint 3 Milestones | Team |
|---|---|
| The robot can take the user to restaurants, toilets and other points of interest. • More destinations are added to the map, stored on the DB and integrated with the UI. | Navigation, UI, DB/Scanning |
| The UI is further enhanced for increased accessibility. • Text sizes are revised to accommodate the target group. • Colours are revised to accommodate colour-blind users in the red-green spectrum. | UI |
| The database is set up on a remote server. • The database is set up on the 'Server Pi' to imitate an airport server. | DB/Scanning |
| The user can receive live updates on their flight status. • When any of the user's flight information is altered on the DB, the user receives a pop-up on the UI. | DB/Scanning, UI |
| The user can pause the navigation process via the screen. | Navigation, UI |
| The user can see a live, dynamic map which tracks their position on the airport's map. • The live-tracking feed shows the user's, and hence IAN's, position on the map as it changes, as well as their chosen destination. | Navigation, UI |
| The seat has an armrest and a seat belt. | Appearance |
| Research the scalability of the navigation and the database. • The navigation team researched other navigation algorithms suitable for the current hardware to make for safer and more efficient journeys. • The DB/Scanning team researched other methods for server-client communication for enhanced security, such as TCP sockets. | Navigation, DB/Scanning |

| Sprint 4 Milestones | Team |
|---|---|
| Writing the user guide. • Each subteam wrote a page listing the setup instructions for their component. • Three members of the team wrote the actual user guide by compiling those pages. • The UI team designed the user guide cover. | All teams |
| Making the promotional and system demonstration videos. • Different members of the team worked on the making and revision of each video. | All teams |
| Making the website. • Each subteam wrote the relevant subsection for their component. • The managers designed the website and compiled the information written by the team. • The UI team designed the banner. | All teams |